Media Coverage

AI “Black Box” Placed in More Hospital Operating Rooms to Improve Safety

Jan 16, 2024

Ars Technica

Image Source: Surgical Safety Technologies

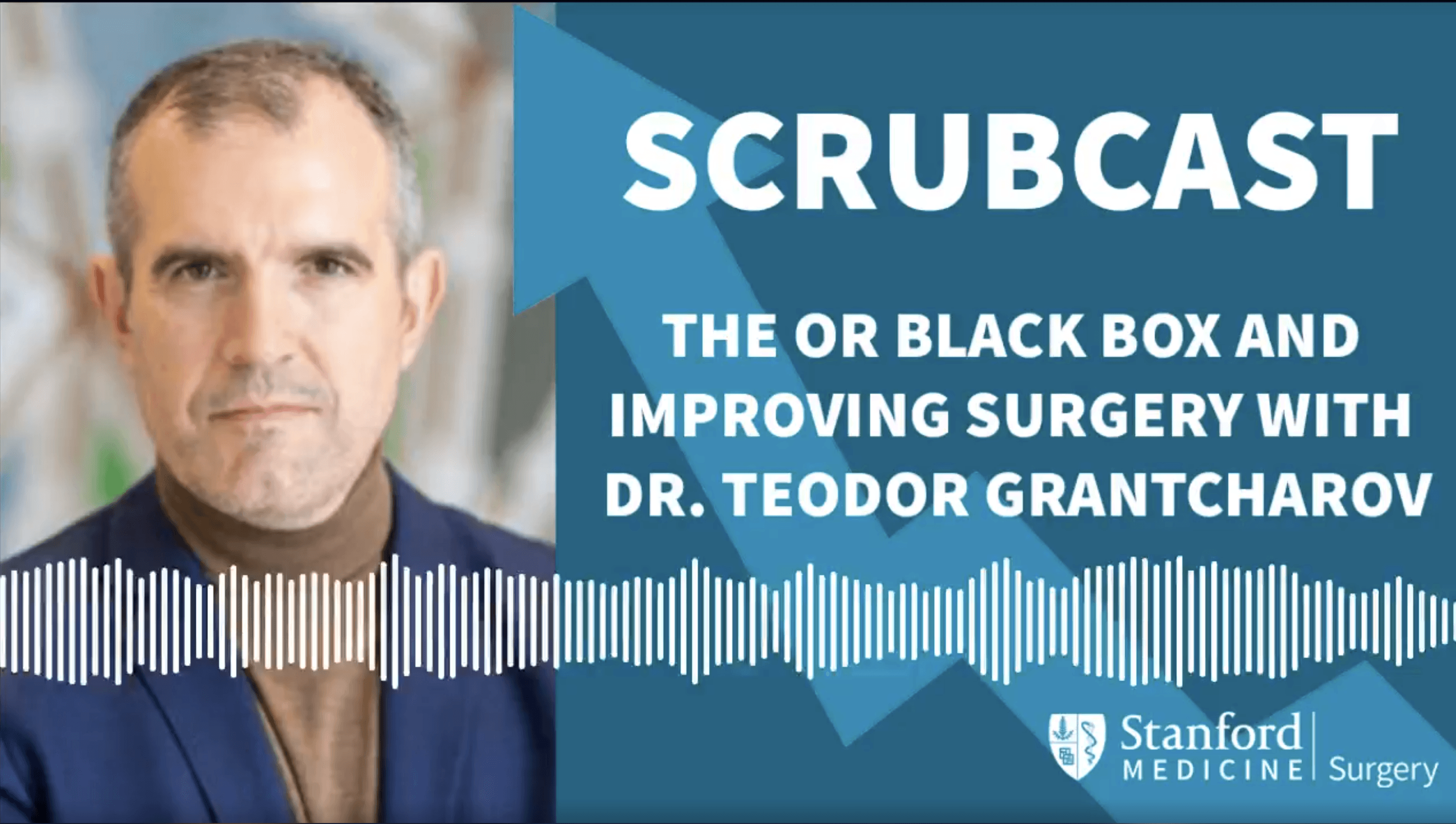

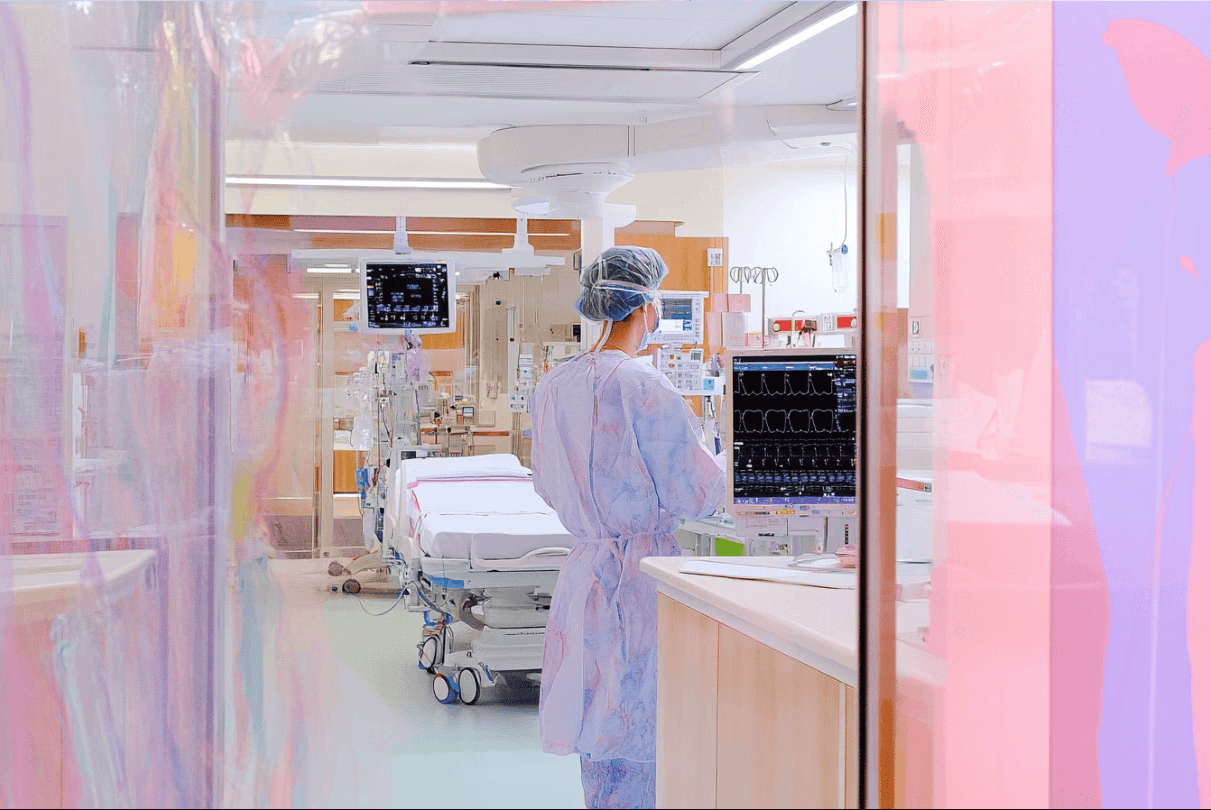

AI-powered surveillance technology is increasingly being installed in hospital operating rooms across the U.S. and Canada to improve surgical safety and efficiency. The system, known as the OR Black Box®, uses wide-angle cameras and AI to collect and analyze audio, video, and vital-sign data during procedures. It is currently in use at more than two dozen hospitals, including Brigham and Women's Faulkner Hospital. The OR Black Box is intended to prevent surgical mishaps rather than to assign blame, and it anonymizes staff by blurring faces and rendering bodies as cartoon figures. Insights from the data have led to significant improvements in protocol adherence and operational efficiency, as Duke Health's findings on patient preparation practices illustrate.

However, the technology has raised concerns among healthcare workers that the recorded data could be misused, particularly in disciplinary actions or malpractice cases. Staff worry that their identities may not be adequately protected, which raises ethical questions about surveillance in high-stakes environments. Legal experts note that it remains unclear how the data might be used in litigation, which highlights the need for clearer guidelines. Despite these concerns, early adopters such as Mayo Clinic report positive outcomes, citing improved teamwork and operational changes made on the basis of the data. These competing views underscore the ongoing debate between technological advancement and preserving a supportive work environment.

Published by: Newsroom